Optimal Control for AFM State Preparation

1. Introduction

The following notebook gives an overview of the application of an optimal control method using Pulser. The announced objective is to achieve the preparation of the well-known antiferromagnetic state - see tutorial Preparing an AF state in the Ising model - on a squared configuration of neutral atoms using optimised pulses smoother than the usual ramps. We define by AF state an equal mixture of the two “checkerboard” spin states.

We begin by importing some basic modules as well as an optimisation function based on Gaussian processes gp_minimize. This notebook uses the scikit-optimize package, which has to be installed beforehand.

[1]:

import time

import numpy as np

import matplotlib.pyplot as plt

import qutip

from skopt import gp_minimize

from pulser import Pulse, Sequence, Register

from pulser_simulation import QutipEmulator

from pulser.waveforms import InterpolatedWaveform

from pulser.devices import AnalogDevice

2. System and Parameters

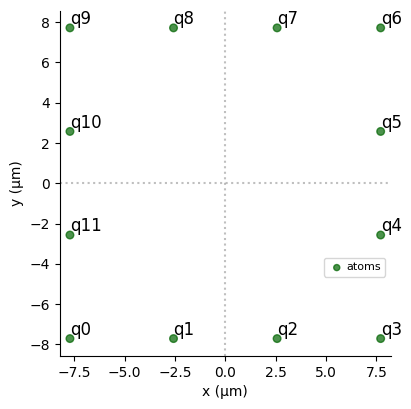

The parameters which will bound the parameter space during the optimisation are chosen as in Preparing an AF state in the Ising model. A square register of \(4\times 4\) atoms with a central \(2\times 2\) square of atoms missing is created. It is equivalent to a chain of \(12\) atoms but avoids edge effects which alter the system dynamics.

[2]:

# Parameters in rad/µs and ns

T = 1000 # duration

U = 2 * np.pi * 5.0

Omega_max = 0.5 * U

delta_0 = -1.0 * U

delta_f = 1.0 * U

R_interatomic = AnalogDevice.rydberg_blockade_radius(Omega_max) / 1.2

print(f"Interatomic Radius is: {R_interatomic}µm.")

N_side = 4

coords = (

[R_interatomic * np.r_[x, 0] for x in range(N_side - 1)]

+ [R_interatomic * np.r_[N_side - 1, y] for y in range(N_side - 1)]

+ [

R_interatomic * np.r_[N_side - 1 - x, N_side - 1]

for x in range(N_side - 1)

]

+ [R_interatomic * np.r_[0, N_side - 1 - y] for y in range(N_side - 1)]

)

reg = Register.from_coordinates(coords, prefix="q")

N = len(coords)

N_samples = 1000

reg.draw()

Interatomic Radius is: 5.140775069514571µm.

A channel is declared on the AnalogDevice in order to check the feasibility of the pulses. If the extreme values of \(\Omega_{max}\), \(\delta_0\) and \(\delta_f\) exceed the thresholds proposed by AnalogDevice, a restriction is required. The best case scenario is to take extreme values which coincide with those of the device (within a certain tolerance), especially for \(\delta_0\) and \(\delta_f\) since they parameterise the adiabatic evolution of the system.

[3]:

seq = Sequence(reg, AnalogDevice)

seq.declare_channel("ising", "rydberg_global")

tol = 1e-6

max_amp = seq.declared_channels["ising"].max_amp * (1 - tol)

max_det = seq.declared_channels["ising"].max_abs_detuning * (1 - tol)

Omega_max = min(max_amp, Omega_max)

delta_0 = np.sign(delta_0) * min(max_det, abs(delta_0))

delta_f = np.sign(delta_f) * min(max_det, abs(delta_f))

print(Omega_max / U, np.round(delta_0 / U, 2), delta_f / U)

0.3999996 -1.0 1.0

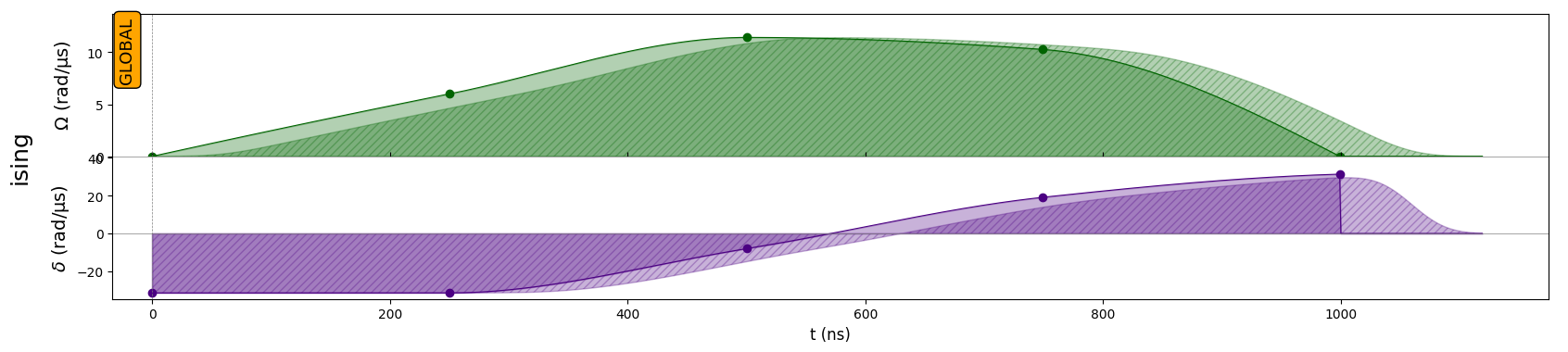

Interpolated pulses

The parameters fed to the optimisation algorithm must uniquely define \(\Omega\) and \(\delta\). Interpolated pulses, once the interpolation method has been set, can be fully described by \(2m\) parameters, which are \(\{\Omega(t_i),\delta(t_i), i\in[1,m]\}\) with \(t_i=T\times i/(m+1)\). The larger \(m\) is, the more complex the pulse behaviour could be but also the more resources are needed since the parameters space is expanding. Here, the interpolation is done through

the InterpolatedWaveform class, which does monotonic cubic splines using PchipInterpolator by default.

We create a random instance of interpolated pulse using Pulser.

[4]:

# Size of the parameter space

m = 3

# Random instance of the parameter space

amp_params = np.random.uniform(0, Omega_max, m)

det_params = np.random.uniform(delta_0, delta_f, m)

We define an interpolation function which takes as argument a set of parameters and returns the interpolated pulse associated. Here, its starting and ending values are fixed such that \(\Omega (0) = \Omega(T) = 0\), \(\delta(0) = \delta_0\) and \(\delta(T) = \delta_f\).

[5]:

def create_interp_pulse(amp_params, det_params):

return Pulse(

InterpolatedWaveform(T, [1e-9, *amp_params, 1e-9]),

InterpolatedWaveform(T, [delta_0, *det_params, delta_f]),

0,

)

Using Pulser, we define a Sequence on the AnalogDevice and add the pulse to it. We can also visualize it.

[6]:

seq = Sequence(reg, AnalogDevice)

seq.declare_channel("ising", "rydberg_global")

seq.add(create_interp_pulse(amp_params, det_params), "ising")

seq.draw()

If we simulate the effect of the sequence on the system we obtain the final state. With each set of parameters, a state of the system can be obtained.

[7]:

simul = QutipEmulator.from_sequence(seq)

results = simul.run()

final = results.get_final_state()

Once the first optimised pulse shapes are obtained, it is worth adding constraints to the interpolated shapes such as strict growth or derivative limitation to further guide the optimisation.

Structure factor

In order for the optimisation algorithm to distinguish between these states and thus to browse the parameter space in search of an optimal shape of pulse, we need to assign a score to each set based on the state it allows us to reach. With the algorithm set to look for minimum, the AF state should have a score of 0 and all other states strictly positive score. The score will be defined based on the Néel structure factor \(S_{Néel}\) defined in the tutorial Preparing an AF state in the Ising model since we want an observable accessible by the processor.

[8]:

def occupation(j, N):

up = qutip.basis(2, 0)

prod = [qutip.qeye(2) for _ in range(N)]

prod[j] = up * up.dag()

return qutip.tensor(prod)

def get_corr_pairs(k, N):

corr_pairs = [[i, (i + k) % N] for i in range(N)]

return corr_pairs

def get_corr_function(k, N, state):

corr_pairs = get_corr_pairs(k, N)

operators = [occupation(j, N) for j in range(N)]

covariance = 0

for qi, qj in corr_pairs:

covariance += qutip.expect(operators[qi] * operators[qj], state)

covariance -= qutip.expect(operators[qi], state) * qutip.expect(

operators[qj], state

)

return covariance / len(corr_pairs)

def get_full_corr_function(reg, state):

N = len(reg.qubits)

correlation_function = {}

for k in range(-N // 2, N // 2 + 1):

correlation_function[k] = get_corr_function(k, N, state)

return correlation_function

def get_neel_structure_factor(reg, state):

N = len(reg.qubits)

st_fac = 0

for k in range(-N // 2, N // 2 + 1):

kk = np.abs(k)

st_fac += 4 * (-1) ** kk * get_corr_function(k, N, state)

return st_fac

We will use one of Pulser’s methods (sample_final_state() which belongs to the CoherentResults class) to sample from the final state of a simulation’s results, wrapped in a function that displays those bitstrings above a certain threshold min_p:

[9]:

def proba_from_state(results, min_p=0.1):

sampling = results.sample_final_state(N_samples=N_samples)

return {

k: f"{100*v/N_samples}%"

for k, v in sampling.items()

if v / N_samples > min_p

}

\(S_{Néel}\) should reach its maximum for the AF state. The obtained value will allow to normalise the score.

[10]:

# Create antiferromagnetic state as the superposition of the two checkerboard patterns:

AF1 = qutip.tensor([qutip.basis(2, k % 2) for k in range(N)])

AF2 = qutip.tensor([qutip.basis(2, (k + 1) % 2) for k in range(N)])

AF_state = (AF1 + AF2).unit()

t1 = time.process_time()

S_max = get_neel_structure_factor(reg, AF_state)

print("S_Neel(AF state) =", S_max)

t2 = time.process_time()

print("computed in", (t2 - t1), "sec")

S_Neel(AF state) = 13.0

computed in 0.13257804699999998 sec

Score function

The score function thus wraps the previous functions and assigns a positive score to each instance of parameters.

[11]:

def score(params):

seq = Sequence(reg, AnalogDevice)

seq.declare_channel("ising", "rydberg_global")

seq.add(create_interp_pulse(params[:m], params[m:]), "ising")

simul = QutipEmulator.from_sequence(seq, sampling_rate=0.5)

results = simul.run()

sampling = results.sample_final_state(N_samples=N_samples)

sampled_state = sum(

[

np.sqrt(sampling[k] / N_samples) * qutip.ket(k)

for k in sampling.keys()

]

)

F = get_neel_structure_factor(reg, sampled_state) / S_max

return 1 - F

[12]:

score(np.r_[amp_params, det_params])

[12]:

0.6457977179487179

3. Optimisation

Bayesian search

Bayesian optimisation method has two keywords: model and decide.

It consists of both a statistical model that simulates the unknown landscape function \(F\) and a decision maker, the acquisition function \(a\), which indicates where the next evaluation will be most likely to enhance optimization. Two distinct stages are carried out. In the supervised training, \(F\) is surveyed according to an initial space-filling pattern. For small-sized spaces, the probing points \(\{x_i\}\) can be formed into a discrete grid efficiently covering the entire search space. However, as the number of dimensions grows, it becomes less resource-consuming to conduct a uniformly random sample of \(n\) points.

A prior surrogate model of \(F\) is established knowing only its values \(\{F(x_i)\}_{1:n}\) at those \(n\) points. Then, in the research part, each new point of a remaining budget \(M-n\) is iteratively selected and used to update the model. That model provides a posterior probability distribution \(f\), which approximates \(F\) at every \(x\). Using Gaussian processes for the modelling enables to get normally distributed \(f(x)\), fully described by only two parameters: a mean and a variance.

At each step of the algorithm, once the landscape has been approximated over the search space, the acquisition function \(a(x)\) can be updated, being completely determined by the current distribution \(f(x)\). \(a\) relates to how desirable evaluating \(F\) at \(x\) is expected to be and its straightforward construction makes it a relatively inexpensive function to evaluate and thus to optimise. By focusing alternatively on exploration and exploitation, \(a\) helps to locate the next optimal point to query, \(x^{*}\). It might seem that we just traded one optimization problem for another, but the call cost of \(a\) is usually derisory compared to the black box one. \(F(x^{*})\) is then added to the known values of \(F\) in order to refine the next model.

After \(M\) calls to \(F\), the Bayesian algorithm outputs \(x_{opt}\), the most “useful” point for the experiment, i.e. the supposed global minimum of \(F\) or the point with the smallest mean in case of strong noise for instance.

Parameters

We are probing the parameter space cons a \(2m\)-dimensional hyper-rectangle \([0,\Omega_{max}]^m\times[\delta_0,\delta_f]^m\) with \(n_r\) training points and a total of \(n_c\) probing points. The optimisation is achieved using gp_minimize from the module scikit-optimize.

[13]:

bounds = [(0.0, Omega_max)] * m + [(delta_0, delta_f)] * m

n_r = 30

n_c = 120

RESULT = gp_minimize(

score, bounds, n_random_starts=n_r, n_calls=n_c, verbose=False

)

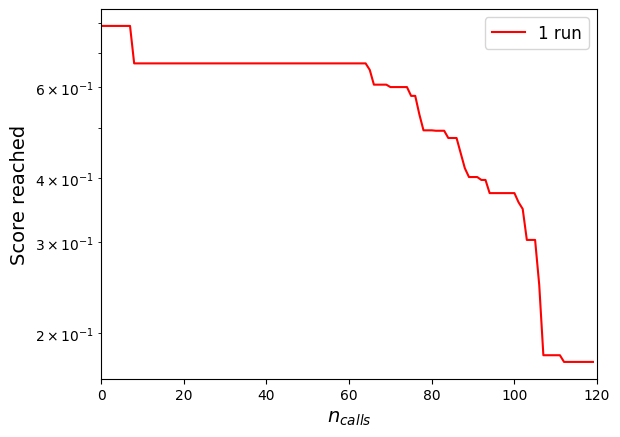

Performance

To ensure that the optimisation is well-designed, i.e. enough but not too many steps, we plot its performance in terms of the minimum score found after \(n_{calls}\) to the score function.

[14]:

def sort_improv(RESULT):

score_vals = RESULT.func_vals

min = score_vals[0]

score_list = []

for s in score_vals:

if s < min:

min = s

score_list.append(min)

else:

score_list.append(min)

return score_list

[15]:

fig = plt.figure()

plt.semilogy(range(n_c), sort_improv(RESULT), "r-")

plt.xlabel(r"$n_{calls}$", fontsize=14)

plt.ylabel("Score reached", fontsize=14)

plt.legend(["1 run"], fontsize=12)

plt.xlim(0, n_c)

plt.show()

Since Bayesian optimisation is a stochastic algorithm, its results may vary between different runs. A 5-run average convergence of the algorithm for the same parameters is shown below. The scores achieved after the total number of calls all remain within an appreciable range.

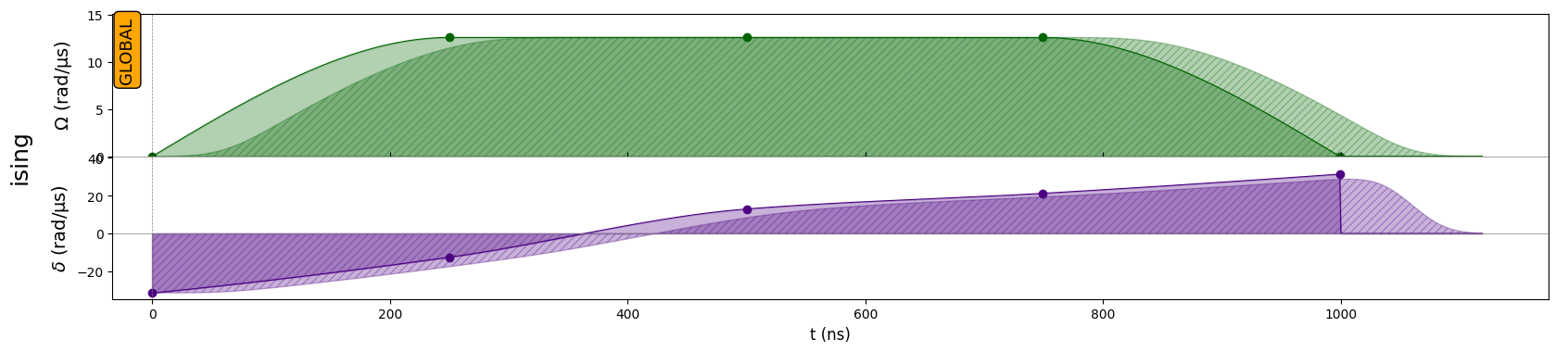

Optimised pulse

An interpolated pulse is produced based on the optimised parameters RESULT.x and then added to a new sequence.

[16]:

seq = Sequence(reg, AnalogDevice)

seq.declare_channel("ising", "rydberg_global")

P = create_interp_pulse(RESULT.x[:m], RESULT.x[m:])

seq.add(P, "ising")

seq.draw()

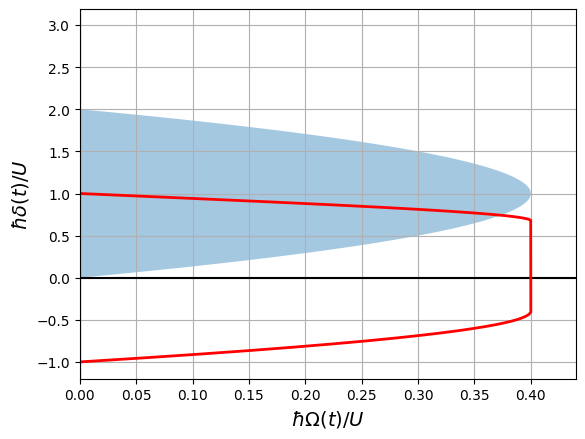

The pulse sequence travels though the following path in the phase diagram of the system (the shaded area represents the antiferromagnetic phase):

[17]:

omega = P.amplitude.samples / U

delta = P.detuning.samples / U

fig, ax = plt.subplots()

ax.grid(True, which="both")

ax.set_ylabel(r"$\hbar\delta(t)/U$", fontsize=14)

ax.set_xlabel(r"$\hbar\Omega(t)/U$", fontsize=14)

ax.set_xlim(0, Omega_max / U * 1.1)

ax.axhline(y=0, color="k")

ax.axvline(x=0, color="k")

y = np.arange(0.0, 3, 0.01)

x = 0.4 * (1 - 1.0 * (y - 1) ** 2)

ax.fill_between(x, y, alpha=0.4)

ax.plot(omega, delta, "red", lw=2)

plt.show()

[18]:

simul = QutipEmulator.from_sequence(seq)

results = simul.run()

print("final =", proba_from_state(results, min_p=0.05))

sampling = results.sample_final_state(N_samples=1000)

sampled_state = sum(

[np.sqrt(sampling[k] / 1000) * qutip.ket(k) for k in sampling.keys()]

)

s_neel_sampled = np.round(get_neel_structure_factor(reg, sampled_state), 3)

print(f"S_Neel (final_sampled) = {s_neel_sampled}")

final = {'010101010101': '35.8%', '101010101010': '39.0%'}

S_Neel (final_sampled) = 10.634

This value should be compared to its equivalent in the tutorial Preparing an AF state in the Ising model. For a ramp pulse with the same parameters and for a square configuration, the structural factor is around \(2.75\) for a maximum of \(24\).

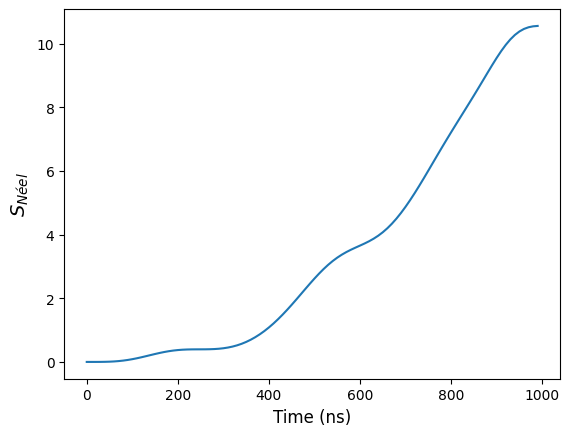

[19]:

# Skip parameter. Only 1 out of 10 pts are kept to enhance the speed.

a = 10

time_domain_reduced = np.arange(T)[::a]

S_Neel_states = [

get_neel_structure_factor(reg, results.states[t])

for t in time_domain_reduced

]

[20]:

plt.figure()

plt.plot(time_domain_reduced, S_Neel_states)

plt.xlabel("Time (ns)", fontsize=12)

plt.ylabel(r"$S_{Néel}$", fontsize=14)

plt.show()

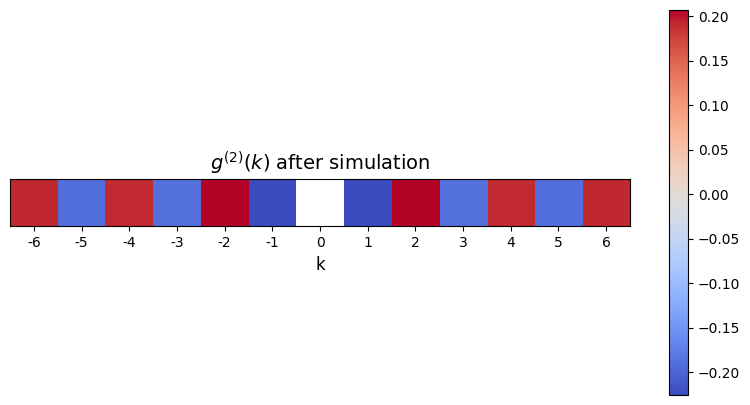

Correlation properties

To identify any AF properties of the final state, it is also possible to examine its correlation function \(g^{(2)}\).

[21]:

final = results.get_final_state()

correlation_function = get_full_corr_function(reg, final)

A = np.reshape(list(correlation_function.values()), (1, N + 1))

A[0, N // 2] = None

plt.figure(figsize=(10, 5))

plt.imshow(A, cmap="coolwarm")

plt.xlabel("k", fontsize=12)

plt.xticks(

range(A.shape[1]), ["{}".format(i) for i in range(-N // 2, N // 2 + 1)]

)

plt.title(r"$g^{(2)}(k)$ after simulation", fontsize=14)

plt.yticks([])

plt.colorbar()

plt.show()

Note that the correlation function would follow an exponential decay (modulo finite-size effects), which is best observed at larger system sizes. A perfect AF state should be as decorrelated as possible, i.e. with a correlation length as great as possible.